Recognition of Hand Movement for Paralytic Persons Based on a Neural Network

THE CODE FOR RECOGNIZING THE HAND MOVEMENTS OF PARALYTIC PERSONS WITH A NEURAL NETWORK IS AS FOLLOWS:-

```python
__author__ = 'manoj'
# Written for Python 2.7 and OpenCV 2.4.x: the legacy `cv` module used below
# was removed in OpenCV 3.0, so the script does not run on current OpenCV releases.
import cv
import math
import cv2
import numpy as np
import csv
import ctypes

MOUSE_LEFTDOWN = 0x0002    # left button down
MOUSE_LEFTUP = 0x0004      # left button up
MOUSE_RIGHTDOWN = 0x0008   # right button down
MOUSE_RIGHTUP = 0x0010     # right button up
MOUSE_MIDDLEDOWN = 0x0020  # middle button down
MOUSE_MIDDLEUP = 0x0040    # middle button up
slidemove = 0
# Caption shown for each predicted class (labels 1..7).
textbelow = ['one', 'Show V', 'three', 'Rock on!', 'punch you!', 'palm open', 'Click Mouse']
input_layer_size = 900   # M * N of the 30 x 30 input image
hidden_layer_size = 25
num_labels = 7           # number of classes to recognize
Theta1 = np.zeros([hidden_layer_size, input_layer_size + 1])
Theta2 = np.zeros([num_labels, hidden_layer_size + 1])


def make_formatted_csv():
    """Load the trained weights (first column = bias) from Theta1.csv and Theta2.csv."""
    first = "Theta1.csv"
    second = "Theta2.csv"
    global Theta1, Theta2
    i = 0
    for line in csv.reader(open(first)):
        for x in range(input_layer_size + 1):
            Theta1[i, x] = float(line[x])
        i += 1
    i = 0
    for line in csv.reader(open(second)):
        for x in range(hidden_layer_size + 1):
            Theta2[i, x] = float(line[x])
        i += 1


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def predict(X):
    """Feedforward pass (900 -> 25 -> 7); returns a 1 x 1 matrix holding the 1-based label."""
    m = X.shape[0]
    h1 = sigmoid(np.matrix(np.hstack((np.ones([m, 1]), X))) * Theta1.transpose())
    h2 = sigmoid(np.matrix(np.hstack((np.ones([m, 1]), h1))) * Theta2.transpose())
    pred = h2.argmax(1)
    return pred + 1


make_formatted_csv()


def contour_iterator(contour):
    """Iterate over the top-level contours returned by cv.FindContours."""
    while contour:
        yield contour
        contour = contour.h_next()


myfont = cv.InitFont(cv.CV_FONT_HERSHEY_SIMPLEX, 1.0, 1.0, 0, 3, cv.CV_AA)
(fstx, fsty) = (0, 0)
INIT_TIME = 10        # frames used to learn the initial background
B_PARAM = 1.0 / 30.0  # background learning rate
zeta = 10.0           # extra tolerance around the background range
capture = cv.CaptureFromCAM(1)  # camera index 1; use 0 for the default webcam
_red = (0, 0, 255, 0)
_green = (0, 255, 0, 0)
image = cv.CreateImage((640, 480), 8, 3)
img1 = cv.CreateImage((640, 480), 8, 3)
av = cv.CreateImage(cv.GetSize(image), cv.IPL_DEPTH_32F, 3)  # background mean
sgm = cv.CreateImage(cv.GetSize(image), 32, 3)               # background spread
lower = cv.CreateImage(cv.GetSize(image), 32, 3)
upper = cv.CreateImage(cv.GetSize(image), 32, 3)
tmp = cv.CreateImage(cv.GetSize(image), 32, 3)
dst = cv.CreateImage(cv.GetSize(image), 8, 3)
msk = cv.CreateImage(cv.GetSize(image), 8, 1)
skin = cv.CreateImage(cv.GetSize(image), 8, 1)               # foreground mask
handlarge = cv.CreateImage(cv.GetSize(image), 8, 1)
handorig = cv.CreateImage(cv.GetSize(image), 8, 3)
copyskin = cv.CreateImage(cv.GetSize(image), 8, 1)
firstcopyskin = cv.CreateImage(cv.GetSize(image), 8, 1)
circleimg = cv.CreateImage((640, 480), 8, 1)
destblobimg = cv.CreateImage((640, 480), 8, 1)
drawimage = cv.CreateImage(cv.GetSize(image), cv.IPL_DEPTH_8U, 3)
savehandimage = cv.CreateImage((30, 30), cv.IPL_DEPTH_8U, 1)  # network input size

# Learn the background: per-pixel mean of the first INIT_TIME frames ...
cv.SetZero(av)
for i in range(0, INIT_TIME):
    image = cv.QueryFrame(capture)
    cv.Flip(image, image, 1)
    cv.Acc(image, av)
cv.ConvertScale(av, av, 1.0 / INIT_TIME)

# ... and the per-pixel spread around that mean.
cv.SetZero(sgm)
for i in range(0, INIT_TIME):
    image = cv.QueryFrame(capture)
    cv.Flip(image, image, 1)
    cv.Convert(image, tmp)
    cv.Sub(tmp, av, tmp)
    cv.Pow(tmp, tmp, 2.0)
    cv.ConvertScale(tmp, tmp, 2.0)
    cv.Pow(tmp, tmp, 0.5)
    cv.Acc(tmp, sgm)
cv.ConvertScale(sgm, sgm, 1.0 / INIT_TIME)

prevfst = (0, 0)
posX, posY = 0, 0  # palm centre; initialised so an empty first mask cannot raise NameError
while True:
    image = cv.QueryFrame(capture)
    cv.Flip(image, image, 1)
    cv.Convert(image, tmp)
    # Pixels inside [av - sgm - zeta, av + sgm + zeta] are background.
    cv.Sub(av, sgm, lower)
    cv.SubS(lower, cv.ScalarAll(zeta), lower)
    cv.Add(av, sgm, upper)
    cv.AddS(upper, cv.ScalarAll(zeta), upper)
    cv.InRange(tmp, lower, upper, msk)
    cv.Sub(tmp, av, tmp)
    cv.Pow(tmp, tmp, 2.0)
    cv.ConvertScale(tmp, tmp, 2.0)
    cv.Pow(tmp, tmp, 0.5)
    # Update the background model on background pixels only.
    cv.RunningAvg(image, av, B_PARAM, msk)
    cv.RunningAvg(tmp, sgm, B_PARAM, msk)
    # Invert: msk now marks the foreground (the hand).
    cv.Not(msk, msk)
    cv.RunningAvg(tmp, sgm, 0.01, msk)
    cv.Copy(msk, skin)
    # Erode then dilate (morphological opening) to remove small noise blobs.
    cv.Erode(skin, skin, None, 1)
    cv.Erode(skin, skin, None, 1)
    cv.Dilate(skin, skin, None, 1)
    cv.Dilate(skin, skin, None, 1)

    # Bounding box of the largest foreground blob.
    storage = cv.CreateMemStorage(0)
    firstcopyskin = cv.CloneImage(skin)
    contour = cv.FindContours(firstcopyskin, storage, cv.CV_RETR_CCOMP,
                              cv.CV_CHAIN_APPROX_SIMPLE, (0, 0))
    blobarea = 0
    (rectx, rexty, rectw, recth) = (0, 0, 0, 0)
    for c in contour_iterator(contour):
        if cv.ContourArea(c) > blobarea:
            blobarea = cv.ContourArea(c)
            rectx, rexty, rectw, recth = cv.BoundingRect(c)

    if rectw > 0 or recth > 0:
        if recth > 190:
            recth = 190  # keep at most 190 px of height below the top of the blob
        hand = (rectx, rexty, rectw, recth)
        cv.Zero(handlarge)
        cv.Zero(handorig)
        cv.SetImageROI(skin, hand)
        cv.SetImageROI(handlarge, hand)
        cv.Copy(skin, handlarge)
        cv.ResetImageROI(skin)
        cv.ResetImageROI(handlarge)
        cv.Dilate(handlarge, handlarge, None, 1)
        cv.Erode(handlarge, handlarge, None, 1)
        cv.Copy(image, handorig, handlarge)
        copyskin = cv.CloneImage(handlarge)

        # Palm centre from the image moments of the hand mask.
        moments = cv.Moments(handlarge)
        try:
            mom10 = cv.GetSpatialMoment(moments, 1, 0)
            mom01 = cv.GetSpatialMoment(moments, 0, 1)
            area = cv.GetCentralMoment(moments, 0, 0)
            posX = int(mom10 / area)
            posY = int(mom01 / area)
        except ZeroDivisionError:
            pass

        contour = cv.FindContours(copyskin, storage, cv.CV_RETR_CCOMP,
                                  cv.CV_CHAIN_APPROX_SIMPLE, (0, 0))
        blobarea = 0
        larrect = (0, 0, 0, 0)
        larseq = 0
        for c in contour_iterator(contour):
            if cv.ContourArea(c) > blobarea:
                blobarea = cv.ContourArea(c)
                larrect = cv.BoundingRect(c)
                larseq = c

        if larrect[2] > 0 or larrect[3] > 0:
            cv.Rectangle(image, (larrect[0], larrect[1]),
                         (larrect[0] + larrect[2], larrect[1] + larrect[3]),
                         cv.CV_RGB(255, 0, 0), 2)
            cv.DrawContours(image, larseq, cv.ScalarAll(255), cv.ScalarAll(255), 100, 2, 8)
            cv.DrawContours(handorig, larseq, cv.CV_RGB(255, 0, 0), cv.ScalarAll(255), 255)

            # Convexity defects: the defect start point farthest from the palm
            # centre (and above it) is taken as the fingertip.
            hull_storage = cv.CreateMemStorage(0)
            storage3 = cv.CreateMemStorage(0)
            hulls = cv.ConvexHull2(larseq, hull_storage, cv.CV_CLOCKWISE, 0)
            defectarray = cv.ConvexityDefects(larseq, hulls, storage3)
            farthestdistance = 0
            for defect in defectarray:
                start = defect[0]
                end = defect[1]
                depth = defect[2]
                dist = math.hypot(posX - start[0], posY - start[1])
                if dist > farthestdistance and posY > start[1]:
                    farthestdistance = dist
                    fstx = start[0]
                    fsty = start[1]
                cv.Line(image, start, depth, cv.CV_RGB(255, 255, 0), 1, cv.CV_AA, 0)
                cv.Circle(image, depth, 5, cv.CV_RGB(0, 255, 0), 2, 8, 0)  # green
                cv.Circle(image, start, 5, cv.CV_RGB(0, 0, 255), 2, 8, 0)  # blue
                cv.Line(image, depth, end, cv.CV_RGB(255, 255, 0), 1, cv.CV_AA, 0)
            fst = (fstx, fsty)

            # Trace the fingertip path on the drawing canvas
            # (filled dot; shift = 0 so fst is in whole pixels).
            cv.Line(drawimage, fst, prevfst, cv.CV_RGB(0, 0, 255), 5)
            cv.Circle(drawimage, fst, 5, cv.CV_RGB(0, 0, 255), -1, 8, 0)
            prevfst = fst
            cv.ShowImage("drawing image", drawimage)

            cv.Circle(image, (posX, posY), 5, cv.CV_RGB(0, 255, 0), 5)
            cv.Circle(handorig, (posX, posY), 5, cv.CV_RGB(0, 255, 0), 5)
            rad = math.hypot(posX - fst[0], posY - fst[1])
            cv.Line(image, fst, (posX, posY), cv.CV_RGB(255, 255, 0), 4)
            cv.Circle(image, (posX, posY), int(rad / 1.5), cv.CV_RGB(255, 255, 255), 6)
            cv.Line(handorig, fst, (posX, posY), cv.CV_RGB(255, 255, 0), 4)
            cv.Circle(handorig, fst, 5, cv.CV_RGB(0, 0, 255), 5)

            # Number of fingers: count the blobs where a circle of radius rad / 1.5
            # around the palm centre overlaps the hand mask.
            cv.Zero(circleimg)
            cv.Zero(destblobimg)
            cv.Circle(circleimg, (posX, posY), int(rad / 1.5), cv.CV_RGB(255, 255, 255), 6)
            cv.And(handlarge, circleimg, destblobimg)
            contour = cv.FindContours(destblobimg, storage, cv.CV_RETR_CCOMP,
                                      cv.CV_CHAIN_APPROX_SIMPLE, (0, 0))
            fingnocnt = 0
            for c in contour_iterator(contour):
                fingnocnt += 1
            # One overlap (presumably the wrist) is not counted as a finger.
            lab = str(fingnocnt - 1) if fingnocnt >= 1 else str(0)
            cv.PutText(image, lab, (10, 50), myfont, cv.CV_RGB(255, 0, 0))

            ## To collect training images, save the same 30 x 30 crop under a
            ## per-gesture file name instead of my.bmp, e.g.:
            ## imagename = "ges" + str(imageno) + "d.bmp"
            ## cv.SetImageROI(handlarge, larrect)
            ## cv.Resize(handlarge, savehandimage)
            ## cv.SaveImage(imagename, savehandimage)
            ## cv.ResetImageROI(handlarge)

            # Shrink the hand mask to 30 x 30 and classify it.
            cv.SetImageROI(handlarge, larrect)
            cv.Resize(handlarge, savehandimage)
            cv.SaveImage("my.bmp", savehandimage)
            cv.ResetImageROI(handlarge)
            cvimage = cv2.imread("my.bmp", cv2.CV_LOAD_IMAGE_GRAYSCALE)
            b = np.matrix(cvimage, np.float64, True)
            # Flatten column by column (MATLAB order) and standardise.
            a = np.reshape(b, [1, b.shape[0] * b.shape[1]], order='F')
            astd = np.std(a, 1) + 0.0000001
            d = (a - np.mean(a, 1)) / astd
            # Convert the 1 x 1 result matrix to a plain int before indexing the list.
            label = int(predict(d)[0, 0])
            predtext = "Using Neural Network: " + textbelow[label - 1]
            cv.PutText(image, predtext, (10, 440), myfont, cv.CV_RGB(255, 0, 0))
            if label == 4:  # "Rock on!" clears the drawing
                cv.SetZero(drawimage)
            if label == 5:  # "punch you!" saves the drawing
                cv.SaveImage("dynamic.bmp", drawimage)
                cv.PutText(image, "image saved", (300, 50), myfont, cv.CV_RGB(255, 0, 0))
            slidemove += 1
            if slidemove % 30 == 0:
                # Optional mouse control (Windows only):
                # ctypes.windll.user32.SetCursorPos(500, 500)
                # ctypes.windll.user32.mouse_event(MOUSE_LEFTDOWN, 0, 0, 0, 0)
                # ctypes.windll.user32.mouse_event(MOUSE_LEFTUP, 0, 0, 0, 0)
                slidemove = 0

    cv.ShowImage("largehand only-binary color", handlarge)
    cv.ShowImage("largehand only-original color", handorig)
    cv.ShowImage("original image", image)
    cv.ShowImage("all foreground", skin)
    key = cv.WaitKey(1)
    if key == 27:  # Esc quits
        break

cv.DestroyAllWindows()
del capture
```

ITS THEORY IS AS FOLLOWS:-

1. Introduction

A sign language is a language that uses manual communication and body language to convey meaning. This can include combining hand shapes, the orientation and movement of the hands, arms or body, and facial expressions to fluidly express a speaker's thoughts. It is therefore an important language for persons with disabilities. The patient-monitoring technique uses image processing and computer vision to understand signs. It keeps track of various hand parameters and provides the data to an analysis and monitoring system. It depends on a gesture-language interpreter for the patients (i.e. an interaction descriptor). This utility system is broadly applicable because it can monitor patients in different places. If a patient asks to eat, or for something else, the system helps him obtain what he wants, even if the request is out of his reach. It is used to express the patient's wishes [1]. This method is the easiest way to help patients get what they need when they are unable to walk because of a stroke and are completely paralysed except for their hands; the system then depends entirely on hand movements. The system consists of a digital camera connected to an active system that closely monitors the SP. The idea of the system is to monitor the patient's hands. Basically, the patient's movements are interpreted and compared with a database of specific movements built into the system. The system rejects any gesture that does not exist in it (i.e. that is contrary to the rules), and an error message is displayed when the patient's gesture is outside the system rules [2][3].
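The text does not say how a gesture is compared with the database or how out-of-rule gestures are rejected. A minimal sketch, assuming each gesture is summarised as a feature vector and matched to the nearest stored vector by Euclidean distance, with a rejection threshold; the function `match_gesture`, the threshold value and the example labels are hypothetical:

```python
import numpy as np


def match_gesture(feature, database, max_distance):
    """Return the label of the nearest stored gesture, or None to reject it.

    feature      -- feature vector of the patient's current gesture
    database     -- dict mapping a gesture label to its stored feature vector
    max_distance -- rejection threshold on the Euclidean distance (hypothetical)
    """
    query = np.asarray(feature, dtype=float)
    best_label, best_dist = None, float("inf")
    for label, stored in database.items():
        dist = float(np.linalg.norm(query - np.asarray(stored, dtype=float)))
        if dist < best_dist:
            best_label, best_dist = label, dist
    # A gesture far from every stored gesture is outside the system rules.
    if best_dist > max_distance:
        return None
    return best_label


# Hypothetical two-entry database of request gestures.
database = {"eat": [1.0, 0.0, 0.0], "drink": [0.0, 1.0, 0.0]}
result = match_gesture([0.9, 0.1, 0.0], database, max_distance=0.5)
if result is None:
    print("Error: gesture is outside the system rules")
else:
    print("Request:", result)
```

The threshold is what turns a plain nearest-neighbour lookup into a recogniser that can refuse unknown gestures and show the error message.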

2. Literature Review

Basically, unwell persons are often too weak to press any button, and most of them cannot walk, so many researchers have worked on helping these persons carry out their daily tasks. Many interesting applications of hand gesture recognition have been introduced in recent years. Some of them are reviewed below:

Mitra and Acharya did notable work on hand gesture recognition, in which a user makes gestures and a receiver recognizes them [15]. Ahn, Kim, Kwak, J. Kim and D. H. Kim developed an augmented interface table using infrared cameras for pervasive environments [16]. Chaudhary and Raheja described the design of intelligent systems in their work [17]. Kuno, Sakamoto, Sakata, and Shirai addressed the problem by restricting backgrounds and clothing, for example dark gloves or a strip worn on the wrist [18]. Wu and Huang extracted the hand region from the scene using segmentation techniques [19]. Vezhnevets, Sazonov, and Andreeva describe many useful methods for skin modeling and detection [20].

3. System Methodology

The hand gesture (HG) detection and recognition system is a classification system that distinguishes the allowed HG of the SP. This methodology uses digital image processing techniques for classification. The input to the system is a set of specific HG images stored in digital format in a private database. New HG images can be added to this database, with a doctor's agreement, according to the patient's needs. The system relies on denoised images (noise reduction or removal): the images are preprocessed before they are added to the assigned database. The proposed system is based on a GUI, also called a vision interface [4][5][14].
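The text does not name the noise-reduction method. As one common choice, here is a sketch of a 3 × 3 median filter written with NumPy only; `median_denoise` is a hypothetical helper, not part of the system described here:

```python
import numpy as np


def median_denoise(gray, size=3):
    """Apply a size x size median filter to a 2-D grayscale image."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    pad = size // 2
    # Replicate edge pixels so the output keeps the input shape.
    padded = np.pad(gray, pad, mode="edge")
    # Stack every shifted view of the neighbourhood, then take the per-pixel median.
    windows = [padded[r:r + gray.shape[0], c:c + gray.shape[1]]
               for r in range(size) for c in range(size)]
    return np.median(np.stack(windows), axis=0).astype(gray.dtype)


# A flat image with one "salt" pixel: the median filter removes the outlier.
img = np.full((5, 5), 100, dtype=np.uint8)
img[2, 2] = 255
clean = median_denoise(img)
print(clean[2, 2])  # 100
```

A median filter suits isolated impulse noise because a single outlier never becomes the middle value of its neighbourhood.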

Vision interfaces are feasible and popular because the computer is able to communicate with the user through a camera or webcam. In this way, the user can give commands (hand commands or gestures) to the computer just by performing actions (hand movements) in front of the webcam, without typing on a keyboard or clicking a mouse button. Interaction then takes place between the user and the computer [6].

The user interface for hand gesture recognition was developed using the MATLAB GUI (Graphical User Interface). At run time, the application handles video streaming from the webcam and the timing of frame processing. The frame rate can exceed 20 fps, which is more than enough for reliable human gesture detection. Hand movements are the key points of the human hand model used in hand gesture recognition. This approach bases detection and recognition on applying a learning model to reconstruct the hand model [6][10].

4. Artificial Neural Network

An ANN is a computational model that resembles the way the human brain works. Just as the human brain consists of billions of neurons interconnected by synapses, a neural network can be formed as a network of computational nodes connected to each other through links. These networks must be trained with a set of data before they can be used to produce the desired output. Because neural networks are adaptive, their structure can change easily depending on the specific information that enters the network during the learning phase. The weights of the links in these networks are assigned during the training phase. ANNs are used to model complex relations between input signals and output signals, and they can find various patterns in input signals [7]. Thanks to their flexible construction, neural networks can be very helpful in modeling complex systems that are very difficult to handle with traditional modeling. ANNs are very useful in image processing, speech recognition, pattern recognition and various other fields that require information extraction.

The nodes of a neural network can be classified into two main categories: input and output. An ANN consists of many nodes, or neurons, called processing elements (PE), and the interconnections between them. One set of nodes represents the input nodes of the neural network, which take data from the external environment. Another set represents the output nodes, which produce the result, with or without intermediate hidden nodes. These hidden nodes are connected to each other, and to input, output or other hidden nodes, by links called connections. Any ANN therefore consists of 3 main parts: (1) input layer nodes, (2) output layer nodes and (3) hidden layer nodes (internal nodes) [8],[9]. Figure (1) shows the block diagram of a neural network with 12 nodes: 3 nodes in the input layer, 7 nodes in the hidden layer and 2 nodes in the output layer. The neural network can then be used in an actual production environment. The process of training an artificial neural network is called learning, and it is generally done in one of three ways, including (a) supervised learning and (b) unsupervised learning.
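To make the layer structure of Figure (1) concrete, the sketch below passes one input vector through a 3-7-2 network with sigmoid activations, the same activation used by `predict` in the Python program above. The weights are random placeholders rather than trained values, and the helper names are hypothetical:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Propagate one input vector through the input -> hidden -> output layers."""
    hidden = sigmoid(W1 @ x + b1)       # 7 hidden nodes
    output = sigmoid(W2 @ hidden + b2)  # 2 output nodes
    return hidden, output


rng = np.random.default_rng(0)
# Layer sizes from Figure (1): 3 input, 7 hidden and 2 output nodes (untrained weights).
W1, b1 = rng.normal(size=(7, 3)), np.zeros(7)
W2, b2 = rng.normal(size=(2, 7)), np.zeros(2)

hidden, output = forward(np.array([0.5, -1.0, 2.0]), W1, b1, W2, b2)
print(hidden.shape, output.shape)  # (7,) (2,)
```

Each weight matrix has one row per node in the receiving layer and one column per node in the sending layer, so its shape mirrors the links drawn between two layers of the diagram.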

5. Image Database

The HG images used for training and testing the neural network in the HG Recognition (HGR) system represent various gestures that help an SP in daily life. There are different kinds of image data, including medical images, natural images and others. These images are intended for training and for extracting the required results. Another group of HG images is used to train the supervised neural networks; these pictures are taken from the Internet or with high-resolution digital cameras or webcams, in different sizes and at different angles [11].

Two main operations are performed on the training images: (1) the images are converted to grayscale, and (2) the image backgrounds are made uniform. The image database is updated continuously, and the supervised neural network is then trained on different types of images. This means that the image database is dynamic, not static [12]. There are various types of HG; the most important HG used by SP are shown in Figure (2).
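A sketch of the two preprocessing operations, assuming RGB input, ITU-R BT.601 luma weights for the grayscale conversion, and a boolean hand mask that is already available (the Python program above obtains a comparable foreground mask by background subtraction). The helper names `to_grayscale` and `uniform_background` are hypothetical:

```python
import numpy as np


def to_grayscale(rgb):
    """Convert an H x W x 3 RGB image to 8-bit grayscale with BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return (rgb[..., :3].astype(np.float64) @ weights).round().astype(np.uint8)


def uniform_background(gray, hand_mask, value=0):
    """Replace every pixel outside the boolean hand mask with one constant value."""
    out = gray.copy()
    out[~hand_mask] = value
    return out


rgb = np.zeros((2, 2, 3), dtype=np.uint8)
rgb[0, 0] = (255, 255, 255)  # white
rgb[0, 1] = (255, 0, 0)      # pure red
rgb[1, 0] = (0, 0, 255)      # pure blue
gray = to_grayscale(rgb)
mask = np.array([[True, True], [False, False]])  # top row is treated as "hand"
print(uniform_background(gray, mask, value=0))
```

Making the background a single constant removes clutter that would otherwise contribute spurious edges and orientations to the features learned by the network.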

6. Procedure of Work

A main objective of Hand Gesture Recognition (HGR) is the ability to use HG in general applications, aiming for natural interaction between the human and the computer.

Supervised Neural Networks (SNN) are used for gesture recognition, including gestures that have not been fully explored. Detecting human gestures from the structure of the human hand is an important issue in most hand model studies and in some gesture recognition systems [13]. HG offer many possibilities in the fields of Computer Vision and Human-Machine Interaction. Gesture recognition can be probabilistic: if the background is not fixed, or the image contains other objects of the same kind, the results can be inaccurate. There are three main stages in the Hand Gesture Recognition Process (HGRP): (1) the Image Capture Phase (ICP), (2) Gesture Extraction (Feature Extraction) and (3) Gesture Recognition (GR). These stages involve: (1) the design of the algorithm, (2) the processing speed, (3) the system architecture and (4) the video interface. The activities of an SP can be detected and recognized by a camera or webcam in a specific area. The camera output can be analyzed and used to control the operation of devices in these application environments: we can analyze all the camera results, compute their histograms, and control the operation of devices in different settings, e.g. a car, a plane, a room or a hospital. Setting aside the scientific complexities, we focused on 2D static Hand Gesture Recognition (HGR) only.

The system started when the image captured from

Camera or Webcam. The system dealing with high

resolution images, because low resolution images

lose many useful information when captured by

camera. Next, the Preprocessing are begin to get the

detection results of image processing . Gesture

Histograms are used to compare test Hand

Gestures (HG) with the actual images database .

System used six HG are important and commonly

used to training the supervised neural network .The

system is very easy and flexibility . The system was

built to train six HG using neural networks. So you

can add other HG to the system help SP in daily

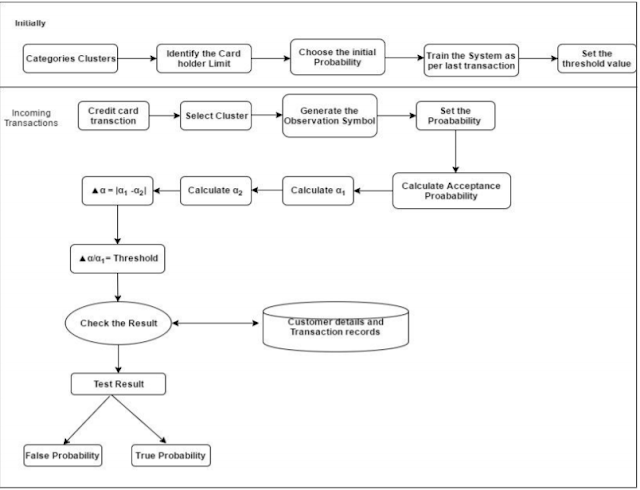

jobs .The proposed algorithm of SPHG System

have the below steps and showing in details in the

figure (3):

Step 1. Capture the image from a high-resolution camera or webcam.

Step 2. Resize the image to the desired size of 150 × 140 pixels.

Step 3. Detect edges (the boundaries of the HG). In this step two filters are used: n = [0 -1 1] for the n direction and m = [0 1 -1] for the m direction.

Step 4. Divide the two resulting image matrices dn and dm element by element, then take the arctangent (tan⁻¹) to get the gradient orientation.

Step 5. Rearrange the blocks of the input image into columns by calling the MATLAB function im2col. This step is optional.

Step 6. Convert the values of the column matrix to degrees. This way the vector can be scanned for values ranging from 0 to 90. This can also be seen in the orientation histograms, where values appear only in the first and last quarters.
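Steps 2 to 6 can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' MATLAB code: the resize uses nearest-neighbour sampling, 150 × 140 is read as 150 rows by 140 columns, pixels where dm is zero are assigned an orientation of 0°, and the 36-bin orientation histogram is a hypothetical choice. Since the arctangent of a ratio lies in (-90°, 90°), the histogram covers that range:

```python
import numpy as np

TARGET_SHAPE = (150, 140)  # assumed (rows, cols) reading of "150 x 140 pixels"


def resize_nearest(img, shape=TARGET_SHAPE):
    """Step 2: nearest-neighbour resize of a 2-D image to (rows, cols)."""
    rows = np.arange(shape[0]) * img.shape[0] // shape[0]
    cols = np.arange(shape[1]) * img.shape[1] // shape[1]
    return img[rows[:, None], cols]


def directional_differences(img):
    """Step 3: apply n = [0 -1 1] along each row and m = [0 1 -1] down each column."""
    img = img.astype(np.float64)
    dn = np.zeros_like(img)
    dm = np.zeros_like(img)
    dn[:, :-1] = img[:, 1:] - img[:, :-1]  # -x[j] + x[j+1]
    dm[:-1, :] = img[:-1, :] - img[1:, :]  #  x[i] - x[i+1]
    return dn, dm


def orientation_degrees(dn, dm):
    """Steps 4 and 6: atan(dn ./ dm) in degrees; pixels with dm == 0 get 0 (assumption)."""
    ratio = np.divide(dn, dm, out=np.zeros_like(dn), where=dm != 0)
    return np.degrees(np.arctan(ratio))


def orientation_histogram(gray, bins=36):
    """Steps 2-6: normalised histogram of gradient orientations."""
    dn, dm = directional_differences(resize_nearest(gray))
    # Step 5: one column vector, in MATLAB column-major order like A(:).
    angles = orientation_degrees(dn, dm).ravel(order="F")
    hist, _ = np.histogram(angles, bins=bins, range=(-90.0, 90.0))
    return hist / hist.sum()


rng = np.random.default_rng(1)
gray = rng.integers(0, 256, size=(480, 640), dtype=np.uint8)
h = orientation_histogram(gray)
print(h.shape, round(h.sum(), 6))
```

Normalising the histogram makes gestures of different sizes comparable, which is what the comparison against database histograms requires.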

During training of the proposed neural network, learning begins by feeding an HG image to the network in iterations, one by one, where each iteration consists of an 8×8 matrix of elements (PE). The first iteration is fed into the network as an input block and a feedforward neural network (FFNN) pass is applied. The output of the first iteration is compared with the desired (supervised) output; if there is an error, the weights of each node (PE) are adjusted by backpropagation (BpNN) for the same iteration until the desired output is reached (i.e. during the training process these weights are adjusted to achieve optimal accuracy and coverage).
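The training loop described above (feedforward pass, comparison with the desired output, backpropagation until the outputs match) can be sketched for a single hidden layer of sigmoid units. The 8 × 8 bar patterns, the hidden-layer size, the learning rate and the squared-error loss are all assumptions made for illustration, and the helper names are hypothetical:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def predict(X, W1, b1, W2, b2):
    """Feedforward pass; returns the index of the strongest output per sample."""
    return sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2).argmax(axis=1)


def train_backprop(X, Y, hidden=8, lr=1.0, max_epochs=5000, seed=0):
    """Batch backpropagation for one hidden layer of sigmoid units.

    X -- (samples, 64) inputs, each a flattened 8 x 8 block
    Y -- (samples, classes) one-hot desired outputs
    Stops as soon as every sample's predicted class matches its target.
    """
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.5, size=(X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.1, size=(hidden, Y.shape[1]))
    b2 = np.zeros(Y.shape[1])
    for epoch in range(1, max_epochs + 1):
        # Feedforward pass.
        H = sigmoid(X @ W1 + b1)
        O = sigmoid(H @ W2 + b2)
        # Compare with the desired output; stop when every sample matches.
        if (O.argmax(axis=1) == Y.argmax(axis=1)).all():
            return W1, b1, W2, b2, epoch
        # Backpropagate the squared error through the sigmoid derivatives.
        d_out = (O - Y) * O * (1.0 - O)
        d_hid = (d_out @ W2.T) * H * (1.0 - H)
        W2 -= lr * (H.T @ d_out) / len(X)
        b2 -= lr * d_out.mean(axis=0)
        W1 -= lr * (X.T @ d_hid) / len(X)
        b1 -= lr * d_hid.mean(axis=0)
    return W1, b1, W2, b2, max_epochs


def bar_block(vertical, index):
    """Hypothetical 8 x 8 training pattern: a single vertical or horizontal bar."""
    block = np.zeros((8, 8))
    if vertical:
        block[:, index] = 1.0
    else:
        block[index, :] = 1.0
    return block.ravel()


X = np.array([bar_block(True, 2), bar_block(True, 5),
              bar_block(False, 2), bar_block(False, 5)])
Y = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)
W1, b1, W2, b2, epochs = train_backprop(X, Y)
print(predict(X, W1, b1, W2, b2), "after", epochs, "epochs")
```

The stopping rule mirrors the description ("until the desired output is reached"); a practical system would also cap the epochs, as `max_epochs` does here, and check accuracy on held-out images.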


Figure 3. SPHG System Flowchart

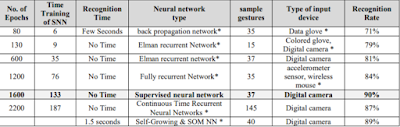

Table 1 shows the processing time, in epochs, of the different types of current SNN and compares it with previous neural network approaches. Note that (*) marks results from previous research articles.

7. Conclusion

In this paper, we present a new model for SP, i.e. patients who cannot move their bodies except for their hands. We built this system to read hand movements and translate them into requests carried out by doctors. The future of HGDR is very bright, especially for disabled patients and SP. This technique is a natural and easy way to interact with a machine (simulation), and the user does not need a training phase. The technique can be made wireless, which is especially useful for distant patients, and it can then be controlled remotely. So in a disaster such as a fire or an earthquake, if a person is in danger and cannot get help, he can show an HG to the system, which will interpret it and send it as a signal to a nearby transceiver; the transceiver then forwards the signal to the rescue team in the control room. The system can be developed further by adding the Global Positioning System (GPS), which would help the rescue team locate these persons.
